0 - Overview

This post discusses a production setup I built for a small-scale website on AWS. I mainly want to talk about the system’s security configuration and how the modules communicate with each other.

Background

This project was part of my coursework for COMP90082 (Software Project) at the University of Melbourne. Our team of five built a cleaner management and job allocation system for a real cleaning company (not a mock assignment). The project included a mobile app that enabled cleaners to view and submit jobs, as well as a web-based platform for administrators to manage assignments.

I was responsible for all deployment and AWS-related tasks within the team. At the end of the project, I handed over the system to the client by migrating all components from my AWS account to the client’s account.

Why it is a production setup

I implemented many improvements in the production setup compared to our simple development setup, focusing on enhanced security and scalability. For example, our dev deployment had no HTTPS. Many services were also public-facing to facilitate development, which is not good practice.

!!! Cost constraints

The client wants to keep costs as low as possible, so most of what I used is within the free tier. That ruled out options like placing resources in a private subnet and connecting to them through a VPN.

1 - Overall architecture

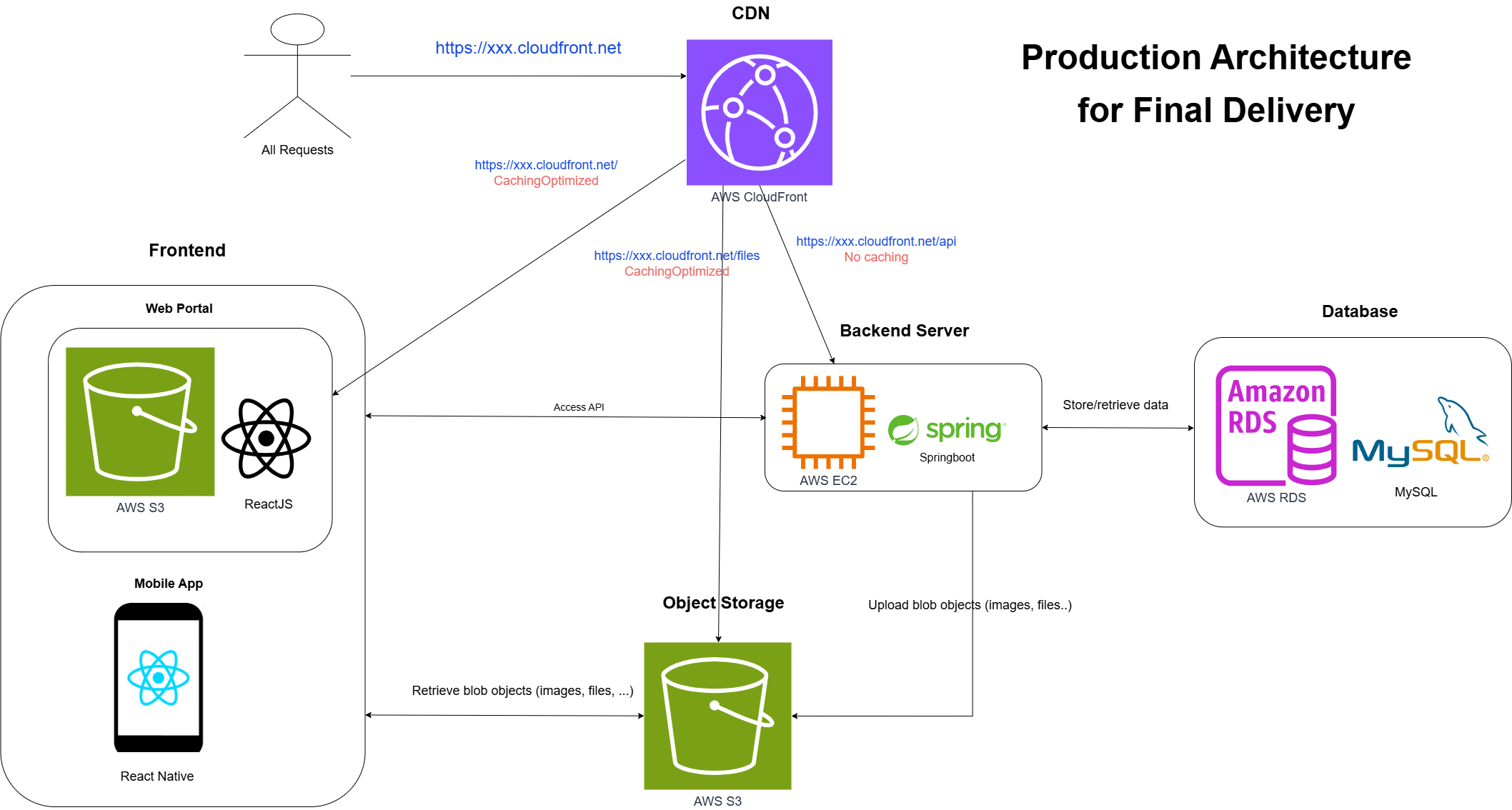

This image shows the overall architecture of the system. Nothing fancy here—just a standard frontend-backend-database-object_storage setup. I won’t dive into the deployment details of each component since they’re pretty straightforward. My main focus is on security and routing configurations.

Here is a brief overview:

- CloudFront CDN: the only public endpoint

- Frontend-Web: ReactJS on S3

- Frontend-Mobile: React Native

- Backend: Spring Boot on EC2

- Database: MySQL on RDS

- Object Storage: S3

2 - CloudFront CDN

Purpose

This is definitely the coolest service on AWS. It basically fulfills all the extra needs of a production setup compared to a dev one. It provides:

- HTTPS: Provides HTTPS endpoints for all modules, a must-have feature. And I don’t need to worry about SSL certificates.

- Routing: Groups all modules under the same domain. I don’t have a domain so can’t use Route 53 for routing. CloudFront is the substitute.

- Endpoint: Serves as the only public endpoint in the system, keeping all other modules hidden behind it.

- Caching/Performance: Finally, the original purpose of a CDN.

How it routes traffic

/api: It routes traffic to my EC2

- Cache policy: `CachingDisabled` (a dynamic API should not be cached in my use case)

- Origin request policy: `AllViewer` (some of my API requests contain query strings. By default, CloudFront doesn’t forward query strings to the origin, so I need to set this policy. I don’t want CloudFront to remove anything from the HTTP requests sent to my API endpoint)

- Origin access policy: not applicable since it’s a public endpoint. I will protect this resource through security group inbound rules (see the EC2 section for details).

/files: It routes traffic to my object storage S3 bucket

- Cache policy: `CachingOptimized` (it should be cached)

- Origin request policy: Default

- Origin access policy: enabled (see the object storage section for the S3 bucket policy)

/: It routes traffic to my frontend S3 bucket

- Cache policy: `CachingOptimized` (it should be cached)

- Origin request policy: Default

- Origin access policy: not applicable since I am using the S3 static website endpoint (see the frontend section for details).
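
To make the routing above concrete, here is a small Python sketch of how the three behaviors could be resolved for an incoming path. It is only a model, not CloudFront’s actual implementation: the path patterns (`/api/*`, `/files/*`) and the origin labels are my assumptions for illustration, since the exact patterns configured in the distribution are not shown in this post. CloudFront checks behaviors in their listed order and falls back to the default behavior, which is what the loop imitates.

```python
from fnmatch import fnmatchcase

# Ordered cache behaviors as (path pattern, origin label, cache policy).
# Patterns and origin labels are illustrative assumptions.
BEHAVIORS = [
    ("/api/*", "ec2-backend", "CachingDisabled"),
    ("/files/*", "s3-object-storage", "CachingOptimized"),
]

# The default behavior catches everything else (the frontend).
DEFAULT_BEHAVIOR = ("*", "s3-frontend-website", "CachingOptimized")


def pick_behavior(path: str) -> tuple[str, str, str]:
    """Return the first behavior whose pattern matches, else the default."""
    for behavior in BEHAVIORS:
        if fnmatchcase(path, behavior[0]):
            return behavior
    return DEFAULT_BEHAVIOR


if __name__ == "__main__":
    for path in ("/api/jobs", "/files/photo.jpg", "/index.html"):
        pattern, origin, cache_policy = pick_behavior(path)
        print(f"{path} -> {origin} ({cache_policy})")
```

Because the first match wins, more specific patterns need to sit above the catch-all default.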

3 - Backend Server in EC2

Overview

I have a Spring Boot server on EC2 running on port 8080. This port should only be accessible by CloudFront to ensure security.

It also needs to connect to RDS. That is configured on the RDS side.

In addition, the backend server uploads files to the object storage S3 bucket, so it needs IAM access to that bucket.

Security Group - Inbound Rules

- TCP - 8080 - `com.amazonaws.global.cloudfront.origin-facing` (this is a special AWS-managed prefix list that contains the IP ranges of CloudFront’s origin-facing servers and allows their inbound traffic. Check [this guide])

- TCP - 22 - My own IP (for SSH access)
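
For anyone who prefers scripting over the console, the two inbound rules could be expressed roughly like this. It is a sketch under assumptions: the helper name, the prefix list ID, and the admin CIDR are placeholders I made up (the real ID of the CloudFront prefix list differs per region and has to be looked up). The dictionaries follow the `IpPermissions` shape that boto3’s EC2 client accepts.

```python
def backend_ingress_rules(cloudfront_prefix_list_id: str, admin_cidr: str) -> list[dict]:
    """Build the inbound rules for the backend security group.

    Hypothetical helper: both arguments are supplied by the caller.
    """
    return [
        {
            # Port 8080 is open only to the CloudFront managed prefix list.
            "IpProtocol": "tcp",
            "FromPort": 8080,
            "ToPort": 8080,
            "PrefixListIds": [
                {
                    "PrefixListId": cloudfront_prefix_list_id,
                    "Description": "CloudFront origin-facing",
                }
            ],
        },
        {
            # SSH is open only to a single admin address.
            "IpProtocol": "tcp",
            "FromPort": 22,
            "ToPort": 22,
            "IpRanges": [{"CidrIp": admin_cidr, "Description": "SSH from my own IP"}],
        },
    ]


if __name__ == "__main__":
    # Placeholder values, not real identifiers.
    for rule in backend_ingress_rules("pl-xxxxxxxx", "203.0.113.10/32"):
        print(rule)
```

The returned list could then be passed as `IpPermissions` to `authorize_security_group_ingress` on a boto3 EC2 client, together with the security group’s ID.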

IAM

IAM role for EC2 to access S3 bucket.
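
I haven’t listed the exact permissions here, so the following is only a guess at a minimal policy for that role. It assumes the backend only needs to upload objects (`s3:PutObject`); the helper name and the bucket name are placeholders, and a real backend may need more actions (for example deleting files).

```python
import json


def upload_policy(bucket_name: str) -> dict:
    """Build an identity policy that lets the role upload objects to one bucket.

    Assumption: uploads only. Add further actions if the backend needs them.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowBackendUploads",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                # Object-level actions apply to keys inside the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bucket name.
    print(json.dumps(upload_policy("example-object-storage-bucket"), indent=2))
```

Scoping the resource to a single bucket keeps the instance from touching any other bucket in the account.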

4 - Database in RDS

Overview

The database should only be accessible by the backend server on EC2 and my own IP address.

Security Group - Inbound Rules

- TCP - 3306 - Reference to EC2’s security group, to make sure EC2 can access RDS (this is a great feature provided by AWS as I don’t need to hard-code EC2’s IP address)

- TCP - 3306 - My own IP
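
The security group reference is the interesting part, so here is a rough sketch of both rules in the `IpPermissions` shape used by boto3’s EC2 client. The helper name, the group ID, and the CIDR are placeholders for illustration, not values from the real setup.

```python
def database_ingress_rules(backend_sg_id: str, admin_cidr: str) -> list[dict]:
    """Build the inbound rules for the RDS security group (hypothetical helper)."""
    mysql_port = {"IpProtocol": "tcp", "FromPort": 3306, "ToPort": 3306}
    return [
        {
            # Reference the backend's security group instead of an IP address,
            # so the rule keeps working if the instance's IP changes.
            **mysql_port,
            "UserIdGroupPairs": [
                {"GroupId": backend_sg_id, "Description": "Backend EC2 security group"}
            ],
        },
        {
            # Direct access from a single admin address.
            **mysql_port,
            "IpRanges": [{"CidrIp": admin_cidr, "Description": "My own IP"}],
        },
    ]


if __name__ == "__main__":
    # Placeholder values, not real identifiers.
    for rule in database_ingress_rules("sg-xxxxxxxx", "203.0.113.10/32"):
        print(rule)
```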

5 - Object Storage in S3

Overview

The object storage’s REST API should only be accessible by CloudFront. No traffic should go directly to this S3 bucket.

Configuration

- Block all public access: enabled

- Bucket policy that allows CloudFront access:

      {
        "Version": "2008-10-17",
        "Id": "PolicyForCloudFrontPrivateContent",
        "Statement": [
          {
            "Sid": "AllowCloudFrontServicePrincipal",
            "Effect": "Allow",
            "Principal": {
              "Service": "cloudfront.amazonaws.com"
            },
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::<bucket name>/*",
            "Condition": {
              "StringEquals": {
                "AWS:SourceArn": "<CloudFront distro ARN>"
              }
            }
          }
        ]
      }

6 - Frontend in S3

Overview

As with the object storage bucket, the best approach for the frontend bucket is to use S3 as the origin and let CloudFront handle all the traffic.

Two popular setups are:

- Do exactly what I did for object storage: make the S3 bucket private and only allow CloudFront access through Origin Access Control and a bucket policy. In this case, the origin in CloudFront points to the S3 REST API endpoint, e.g. `https://<bucket-name>.s3.<region>.amazonaws.com/`

- Make the S3 bucket public and enable static website hosting. In this case, we restrict access to CloudFront through the Referer header. The idea is that we let CloudFront add a custom header with a secret value when it forwards the request to S3. Then S3 checks the header and only allows the request if the header is correct. In this case, although S3 is public, only CloudFront can access it. Another key difference between this option and the first is that CloudFront points to the S3 static website endpoint instead of the REST API endpoint. The static website endpoint is something like `http://<bucket-name>.s3-website.<region>.amazonaws.com`

Read more about all possible options [here].

Among these two, based on my research and previous experience, the first option is definitely more popular, secure and recommended. I only came across one article that suggested doing the second, but the author’s explanation is pretty vague. The article supporting the second option can be found [here].
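
For reference, the header check in the second option is usually done with a bucket policy condition on `aws:Referer`. The sketch below shows what such a policy might look like. I did not deploy this myself, and the helper name, bucket name, and secret are placeholders. CloudFront would also need a custom origin header named `Referer` carrying the same value.

```python
import json
import secrets


def referer_policy(bucket_name: str, secret: str) -> dict:
    """Build a bucket policy that only serves requests carrying the shared secret.

    Hypothetical helper: the secret must match the custom Referer header
    that CloudFront adds when it forwards requests to the website endpoint.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowGetWithSecretReferer",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                # Requests without the matching header are not allowed.
                "Condition": {"StringLike": {"aws:Referer": secret}},
            }
        ],
    }


if __name__ == "__main__":
    # Generate a random secret; the bucket name is a placeholder.
    shared_secret = secrets.token_urlsafe(32)
    print(json.dumps(referer_policy("example-frontend-bucket", shared_secret), indent=2))
```

The header value works like a shared password, so anyone who learns it can bypass CloudFront. That is one reason the first option is generally considered more secure.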

What I did

Although the first method would be the best, what I did is a downgraded version of the second method: I made the S3 bucket public, enabled static website hosting, and let CloudFront access this public endpoint. I didn’t set up the Referer header check, so the S3 website endpoint is publicly accessible as well.

I chose this approach because the website isn’t fully developed, and the AWS setup might still change. Having a direct-access S3 endpoint as a backup is useful for future testing. Plus, there doesn’t seem to be any major security concern with this setup. Even if a malicious user finds the S3 endpoint, there isn’t much they can do beyond reading the same static files that CloudFront already serves. I feel like EC2 and RDS are more critical targets if misconfigured.

7 - Conclusion

Summary of the setup

- CloudFront is the only legit public endpoint in the system. It routes traffic to all other modules.

- EC2: only accessible by CloudFront and my own IP. It is hidden.

- RDS: only accessible by EC2 and my own IP. It is hidden.

- Object Storage S3: only accessible by CloudFront. It is hidden.

- Frontend S3: theoretically it should be hidden, but I made it public for now as a backup endpoint. All normal requests still go through CloudFront.

Thank you for reading 😊.